Self-driving cars are coming. Uber already has them on the road. Google’s smart car has driven over 2 million miles. There is no doubt that these vehicles will have a positive economic and societal impact. However, they also raise a question of morality.

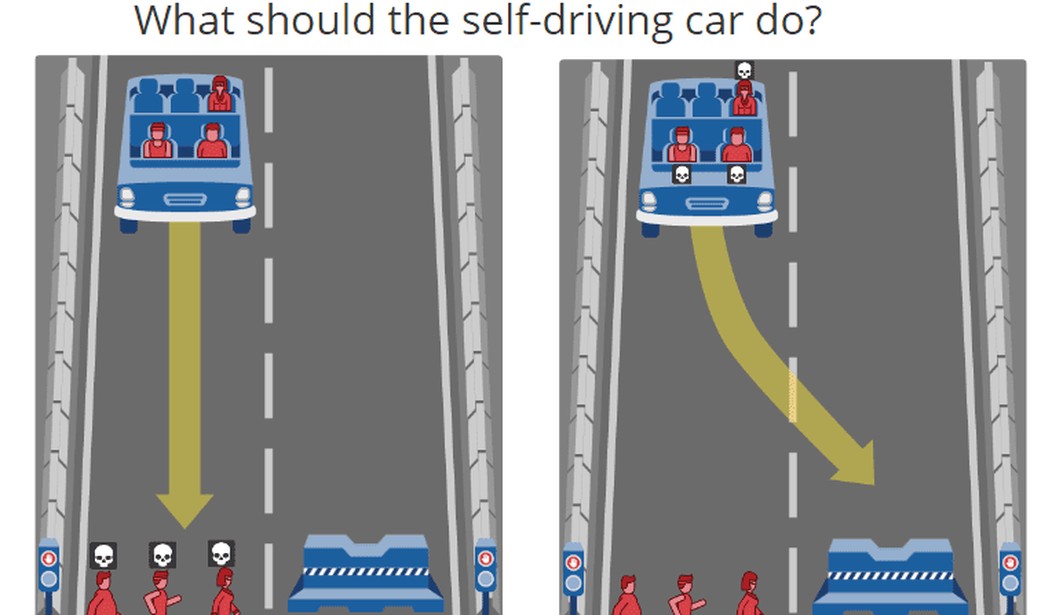

Here is a scenario: A self-driving car is going down a steep hill when its brakes give out. A pedestrian is crossing the street and would surely be seriously injured or killed if the car keeps going straight. However, if the car swerves to miss the pedestrian, it will go into the ditch, and the passenger would certainly be seriously injured or killed. What is the correct moral decision for the car to make?

This question can get more complicated. What if a mother and child were crossing the street? What if the pedestrian were crossing illegally? What if your family were in the car and a different family were crossing the street?

Researchers at the Massachusetts Institute of Technology asked these very questions … and many more. They created a simple test on a website called the “Moral Machine.” There, you are asked to judge 13 situations and decide who lives and who dies in each. At the end, you are given a chart of your decisions showing which types of people you give preference to: male or female, young or old, wealthy or poor. Thrown into the mix are dogs, cats, the homeless, and criminals. You may be surprised by some hidden biases you have and by how your choices compare with those of others who took the test.

Here is a perfect example of a complicated situation: Should three people die because four people are crossing the street illegally? Or should four people die because the other three are following the law?

Take the test or design your own scenarios here. You will find there are no easy answers, which will leave you wondering … what are we going to program the cars to do?
