There’s genuine danger from AI, but not in the form the public imagines. The idea of a single, sentient Skynet that will take over the world is probably not feasible. Meta’s new AI chatbot BlenderBot 3 made minor news when it called Mark Zuckerberg ‘creepy and manipulative.’ How could a product turn against its owner? Because it learns by observing others. The power of modern AI lies in its ability to find patterns in masses of data. If BlenderBot was trained on human datasets, then it would reflect the fact that many people perceived Zuckerberg as ‘creepy’ rather than reaching that conclusion from logic built into it.

There will be as many different inferences as there are different datasets. A Turing machine that uses deep learning feeds off the new patterns it discovers in reality. It does not, and cannot, produce the full efflorescence of the universe from a limited set of axioms; it must add a new proposition from without, as expounded by Gödel, or resort to an Oracle, as explained in Part One.

AI, therefore, needs a constant and staggering intake of data to retain its superhuman competence. Without an outside source of surprise, the AI engine would soon become stale. It is this necessary connection to external phenomena that is the big limit to the world-dominant AI of dystopian sci-fi. As datasets become larger, they throttle the data exchange between AI processor and that which it is trying to observe/control. As its span expands and observational detail grows, the world becomes less and less real-time with respect to any would-be Skynet.

With signals limited by the speed of light (about a foot per nanosecond in a vacuum), a Skynet’s ability to measure the instantaneous state of a densely sampled complex system at a distance is progressively restricted. The farther out an AI reaches, the more it must delegate to autonomous subsidiaries to deal with events too many nanoseconds away. Like the British Admiralty in the Age of Sail, it must decide which events can be left to a subordinate’s initiative, and which must be reserved to itself.
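To put rough numbers on the latency argument, here is a minimal sketch. It assumes signals travel at the vacuum speed of light (real networks are slower), and the distances are illustrative choices, not figures from any source.

```python
# Back-of-the-envelope light-delay calculator.
# Assumption: signals move at the vacuum speed of light, the best possible case.
C_M_PER_S = 299_792_458  # speed of light in a vacuum, metres per second


def one_way_delay_ns(distance_m: float) -> float:
    """Minimum one-way signal delay, in nanoseconds, over distance_m metres."""
    return distance_m / C_M_PER_S * 1e9


def round_trip_delay_ms(distance_m: float) -> float:
    """Minimum observe-then-act delay, in milliseconds, over distance_m metres."""
    return 2 * one_way_delay_ns(distance_m) / 1e6


# Illustrative distances (hypothetical, chosen only to show the scale).
EXAMPLES = {
    "across a server rack (1 m)": 1.0,
    "across a city (50 km)": 50_000.0,
    "halfway around the Earth (20,000 km)": 20_000_000.0,
}

if __name__ == "__main__":
    for label, metres in EXAMPLES.items():
        print(f"{label}: {round_trip_delay_ms(metres):.6f} ms round trip")
```

Even at the physical limit, a controller on the far side of the planet cannot observe an event and respond to it in much under 133 milliseconds, which is why anything needing instant action has to be decided locally.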

Even if it tried to control everything from the center, the required connections would soon exhaust all the bandwidth available. The proximity of AI inference to the dataset becomes a critical factor where instant action is required, most obviously in self-driving vehicles, where AI must be local, not dependent on some remote server. This would tend to create a world of multiple competing and cooperating AIs, with data domains describing the area of dominance of each.

The other factor militating against a unitary, planet-wide AI is power requirements. “As AI becomes more complex, expect some models to use even more data. That’s a problem because data centers use an incredible amount of energy,” says an article in TechTarget.

…the largest data centers require more than 100 megawatts of power capacity, which is enough to power some 80,000 U.S. households, according to energy and climate think tank Energy Innovation. With about 600 hyperscale data centers — data centers that exceed 5,000 servers and 10,000 square feet — in the world, it’s unclear how much energy is required to store all of our data, but the number is likely staggering.
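The arithmetic implied by those quoted figures can be sketched as follows. The aggregate number assumes, purely for illustration, that every one of the roughly 600 hyperscale centers draws the 100 megawatts cited for the largest ones, so it is a rough scale estimate and not a measurement.

```python
# Rough arithmetic on the quoted data-center figures.
LARGE_DC_MW = 100        # power capacity of a very large data center (quoted)
HOUSEHOLDS = 80_000      # U.S. households that capacity could supply (quoted)
HYPERSCALE_COUNT = 600   # approximate number of hyperscale centers (quoted)


def kw_per_household(dc_mw: float, households: int) -> float:
    """Average kilowatts per household implied by the quoted comparison."""
    return dc_mw * 1000 / households


def aggregate_gw(count: int, dc_mw: float) -> float:
    """Total gigawatts if every center drew dc_mw (an illustrative assumption)."""
    return count * dc_mw / 1000


if __name__ == "__main__":
    print(f"{kw_per_household(LARGE_DC_MW, HOUSEHOLDS):.2f} kW per household")
    print(f"{aggregate_gw(HYPERSCALE_COUNT, LARGE_DC_MW):.0f} GW in aggregate")
```

On these figures the comparison works out to about 1.25 kilowatts per household, and 600 centers at 100 megawatts each would come to about 60 gigawatts.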

Recently the media has discovered that AI has an enormous carbon footprint and is perplexed over how to deal with it. As George Gilder noted, when a supercomputer defeats man at Chess or Go, the man is only using 12 to 14 watts of biological power, while the computer and its networks are using rivers of electricity. If indeed a single AI managed to become sentient and rule the world it might well divert every available power source to the task of expanding its own intelligence, leaving obsolete meat-based humanity to fend for itself. (This nightmare scenario is called perverse instantiation: “a type of malignant AI failure mode involving the satisfaction of an AI’s goals in ways contrary to the intentions of those who programmed it.”)

Latency limits, network density, and power requirements may explain why nature opted for billions of intelligent beings whose individual brains needed only a flashlight bulb’s power instead of a planetary-sized superbrain requiring untold electricity. It’s not unreasonable that developments in machine intelligence will follow nature’s evolutionary path. Instead of a single Skynet, we are likely to see multiple artificial intelligences.

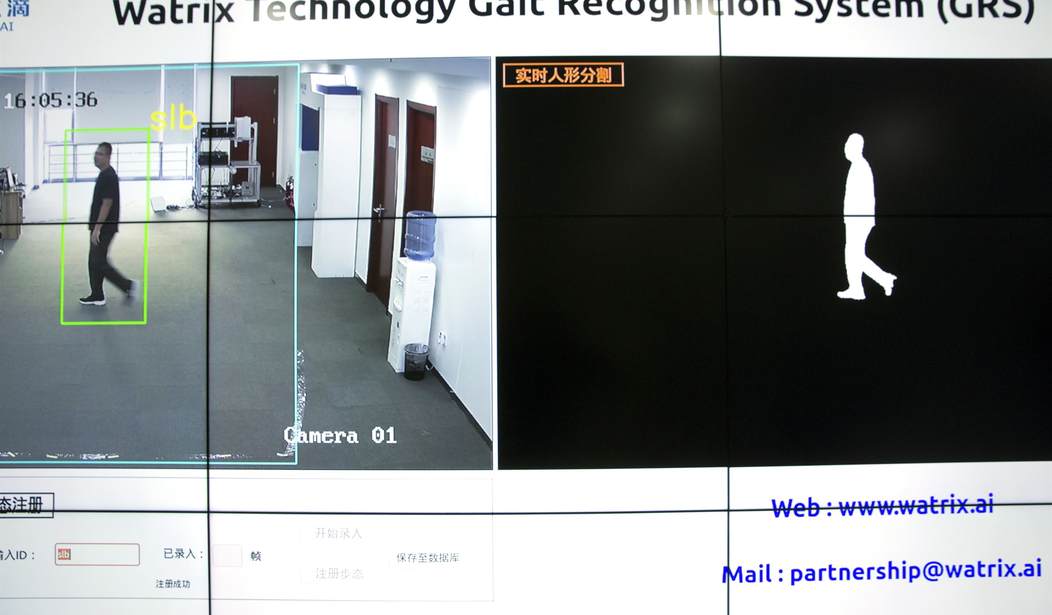

One can already empirically observe the rise of rival AI coalitions, most notably in America and China. Forbes recently noted, “Global surveillance is increasing, with governments requesting almost 40 per cent more user data from Apple, Google, Facebook, and Microsoft during 2020 than in the year before.” For its part, China famously has a social credit system, “so that businesses, individuals and government institutions can be tracked and evaluated for trustworthiness… The program is mainly focused on businesses, and is very fragmented, contrary to the popular misconceptions that it is focused on individuals and is a centralized system.” A single omniscient, all-wise controller is unlikely.

The futility of “trusting the machine” as a source of unimpeachable legitimacy is nowhere more obvious than in China, where the state openly tells the machine what to think, avoiding the BlenderBot 3 scenario. The BBC reports that Chinese internet giants were ordered to hand over their algorithm data to the government. “Each one of these algorithms has been given a registration number, so the CAC can focus enforcement efforts on a particular algorithm. The question is, what is the next step to seeing if an algorithm is up to code?”

Here all pretense of scientific wisdom from beyond human ken is abandoned. The Chinese Communist Party’s legal code is the Turing Oracle, the extra Gödelian axiom, the external influence to which the machine must bend, the diktat from on high. Woke would probably be the corresponding code in the West. The World Economic Forum describes “a new credit card that monitors the carbon footprint of its customers – and cuts off their spending when they hit their carbon max.” The truth of global warming is a given, and woe unto the deniers. Machiavelli’s dictum, “it is far safer to be feared than loved if you cannot be both,” comes to mind.

In short, AI may not provide a solution to the problem of loss of institutional trust posed in Part One by supplying the slogan “trust the AI” in place of the shopworn “trust the experts”. Rather it will replace trust with fear. The phrase “fear the experts” bids fair to become the watchword of the mid-21st century.

Fortunately for the public, there will be multiple systems, some in conflict or competition with the others. No Skynet will rule the world in the immediate future. The landscape of AI and institutions may resemble this:

- Narrowly focused AI applications will become common in industry, research, consumer appliances, etc., but general-purpose artificial intelligence will be largely confined to a few government-affiliated mega projects.

- AI-enhanced political coalitions will be formed, differentiated according to the foundational axioms of their creators. Debates among factions will become more philosophical, civilizational, and general in nature, as the machine can work out the details. These debates will be the new ideological fault lines of the world.

- Data, and the connectivity that collects and distributes it, will be among the most valuable things on earth as these are the raw material of new discoveries and innovation. Who controls data will be the primary determinant of the survival of individual privacy, liberty, and creativity.

In that technologically assisted human future, the eternal questions will be revisited with a vengeance. All the issues considered settled in the premature End of History following the conclusion of Cold War 1 will be reopened, not just about who the new victor in the second war will be but everything people have ever wondered about, with the boost of our augmented tool kit.

But likelier than not, human ambition will exploit the opacity of AI-generated predictions to amass power. “During the pandemic, public health rearranged society to control a single metric — covid cases — based on a lab test.” The capacity of technology to deduce the truth will be matched by its ability to foster self-deception. Impelled by the hidden hand of its masters, the machine can be spectacularly wrong and generate coalitions against it. It will be my black box against your black box. Thus in the coming years, albeit with new tools, the tale of history, far from ending in a singularity, will be renewed.

Books: Gaming AI: Why AI Can’t Think but Can Transform Jobs by George Gilder. Pointing to the triumph of artificial intelligence over unaided humans in everything from games such as chess and Go to vital tasks such as protein folding and securities trading, many experts uphold the theory of a “singularity.” This is the trigger point when human history ends and artificial intelligence prevails in an exponential cascade of self-replicating machines rocketing toward godlike supremacy in the universe.
